The decision by Meta, the parent company of Facebook and Instagram, to end its fact-checking program and otherwise reduce content moderation raises questions about what content on these social media platforms will look like going forward.

One worrying possibility is that the change could open the door to more climate misinformation on Meta’s apps, including false or out-of-context claims during disasters.

Meta’s policies have directed fact-checkers to prioritize “viral erroneous narratives,” fabricated stories, and “demonstrably untrue declarations that are current, trending, and significant.” The company has explicitly stated that this excludes opinion content that contains no false claims.

The company will end its agreements with U.S.-based third-party fact-checking organizations in March 2025. The changes planned for U.S. users will not affect fact-checked content seen by users outside the United States. The tech industry faces greater regulatory scrutiny over combating misinformation in other regions, such as the European Union.

Fact-checking curbs climate misinformation

I study climate change communication. Fact-checks can help correct political misinformation, including misinformation about climate change. How well people absorb a fact-check depends on a combination of factors, including their beliefs, ideology, and prior knowledge.

Finding messages that align with the target audience’s values, together with using trusted messengers (for example, climate-friendly conservative groups when speaking to political conservatives), can help. So can appealing to shared social norms, such as protecting future generations from harm.

Heat waves, flooding, and fire conditions are becoming more common and more destructive as the world warms. Extreme weather events often prompt a spike in social media attention to climate change. Posting surges during a crisis but drops off quickly afterward.

Low-quality fake images created with generative AI, known as “AI slop,” add to online confusion during emergencies. For example, after the back-to-back hurricanes Helene and Milton last autumn, fake AI-generated images of a distressed young girl clutching a puppy in a boat went viral on the social network X. The spread of rumors and misinformation hindered the Federal Emergency Management Agency’s disaster response.

What distinguishes misinformation from disinformation is the intent of the person or group sharing it. Misinformation is false or misleading content shared without an intent to deceive. Disinformation is false or misleading information shared deliberately in order to mislead.

Disinformation campaigns are already underway. In the aftermath of the 2023 wildfires in Hawaii, researchers at Recorded Future, Microsoft, NewsGuard, and the University of Maryland independently documented an organized propaganda campaign by Chinese operatives targeting U.S. social media users.

To be sure, the spread of misleading information and rumors on social media is not a new problem.

However, not all content moderation approaches are equally effective, and platforms keep changing how they address misinformation. For example, X replaced its rumor controls, which had helped debunk false claims during fast-moving emergencies, with user-generated labels known as Community Notes.

False claims can go viral rapidly

Meta CEO Mark Zuckerberg specifically cited X’s Community Notes as an inspiration for his company’s planned changes to content moderation. The trouble is that false claims go viral quickly.

Recent research has found that crowd-sourced Community Notes respond too slowly to stop viral misinformation early in its life online, which is when such posts are most widely viewed.

In the case of climate change, misinformation is notably “persistent.” Once people have seen falsehoods repeatedly, it is especially hard to dislodge them from their minds.

Furthermore, climate misinformation undermines public support for established science. Simply sharing more facts does not stop the spread of false claims about climate change.

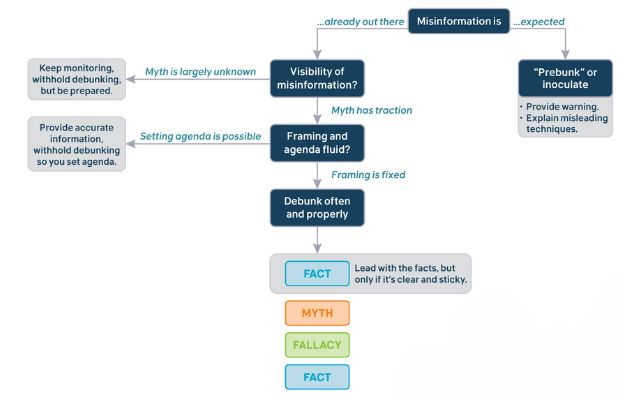

Explaining that scientists agree that climate change is happening and is caused by human activities that release greenhouse gases, such as burning fossil fuels, can prepare people to avoid misinformation. Psychological research suggests that this kind of preparatory communication, known as “pre-bunking,” is crucial.

This kind of inoculation reduces the influence of false claims to the contrary.

That is why warning people about climate misinformation before it goes viral is crucial for curbing its spread. Doing so is likely to become harder on Meta’s apps.

Social media users as the sole arbiters of truth

With the coming changes, users will be the fact-checkers on Facebook and other Meta platforms. The most effective way to inoculate against climate disinformation is to lead with accurate information, then briefly warn about the myth, stating it only once. Then explain why it is inaccurate and repeat the truth.

During climate change-fueled disasters, people urgently need accurate, reliable information to make lifesaving decisions. Finding it is already difficult, as when Los Angeles County’s emergency management office mistakenly sent an evacuation alert to ten million residents on January 9, 2025.

In the information vacuum of a crisis, crowd-sourced debunking is no match for organized disinformation campaigns. The conditions for the rapid and unchecked spread of misleading and outright false content are likely to worsen with Meta’s content moderation policy and algorithmic changes.

A significant share of Americans want the tech industry to do more to limit false information online. Instead, it appears that big tech companies are leaving fact-checking to their users.